The Hidden Architecture of AI Interactive Fiction: From Token Budget to Broken Bottlenecks

How I built a story telling LLM engine that actually responds like a gamemaster and doesn’t stall like a blog post generator.

🧠 The Illusion of Speed (and Why It Breaks)

In my previous adventures I found that most local LLM storytelling engines stall, not because the model is slow but because everything around it is:

- Prompt context gets bloated with full history.

- Token limits are hit before the first decision point.

- Threads spin up blocking processes with arbitrary timeouts.

I learned this the hard way building Noir Adventures, a AIDungeon style system where every player action feeds a cinematic AI narrator and rolls for events and interactions. Think Dungeons and Dragons meets AI story telling in real-time.

⚙️ Our Bottlenecks (and Fixes)

1. Prompt Length ≠ Token Safety

🔧 My Fix: I switched from string truncation to token-aware trimming using

llama_cppandtransformerstokenizers. Memory now clips oldest turns based on actual token count, not guesswork.

2. ThreadPoolExecutor Isn’t Made for LLMs

🧵 My Fix: Replaced

ThreadPoolExecutorwithasyncio.to_thread()for async model calls. I also added a graceful timeout fallback that retries with reducedmax_tokens. I have no idea if this is best, but it works very well currently.

3. Context Isn’t Everything—Flow Is

Instead of dumping the whole conversation each turn, I:

- Inject only the last 5–7 turns of memory.

- Pull flavor and tone dynamically from the adventure setup (e.g. “a smoky jazz bar in the rain”).

- Avoid repetition by rephrasing memory into compressed narrative seeds.

🧠 Result: The AI sounds consistent and aware, without burning 2,000 tokens to remember “you walked into a bar.”

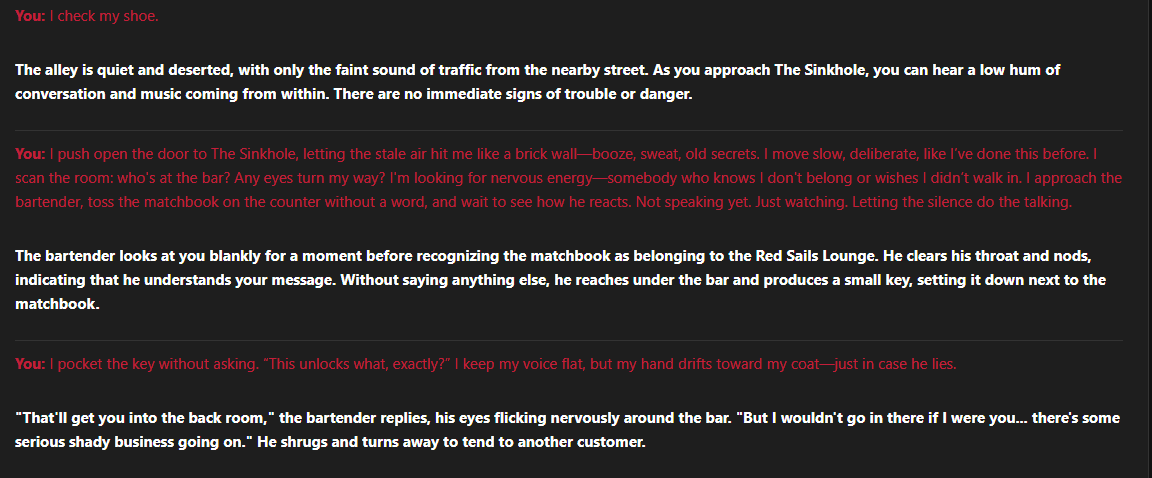

Here's an example.

🧪 Engineering Storytelling Like Infrastructure

The biggest takeaway?

Narrative AI isn’t about bigger models—it’s about treating story flow like an API call: fast, contextual, and scoped.

By optimizing the architecture around the LLM, not just the prompt—we made a local RPG engine feel reactive, immersive, and alive.

💬 Want to explore the system?

Noir Adventures is an experimental project—part of an ongoing investigation into local language models and responsive narrative engines.

Due to current legal constraints in the United States, along with other personal legal agreements, I am not releasing, monetizing, or distributing this system publicly.

This work is shared strictly for research, prototyping, and collaboration among developers and creative engineers exploring the edge of narrative AI.

If you're working on similar systems—or just curious about how to build them—I’d love to trade notes.

Author: Will Blew, SARVIS AI

Add a comment!

Important legal note: None of the statements in my blog are supoorted or endorsed by Akamai Technologies Inc unless explicitly stated.